“Hear me calling, hear me calling loud,

If you don’t come soon, I’ll be wearing a shroud.” – Ten Years After (1969)

Introduction

Today marks the tenth anniversary of my involvement with Open Data in Scotland. As I wrote here, back in 2009-2010 I’d been following the work that Chris Taggart and others were doing with open data, and was inspired by them to create what I now believe to have been the first open data published in the public sector in Scotland.

This piece is a reflection of my own views. These views may be the same as those held by colleagues at Code The City or indeed on the civic side of the Open Government Partnership. I’ve not specifically asked other individuals in either group.

While my involvement in, and championing of, open data in Scotland is now a decade long, my enthusiasm for the subject and in the the social and economic benefits it can deliver, is undiminished by my leaving the public sector in 2017 after thirty four years. In fact the opposite is true: the more I am involved in the OD movement, and study what is being achieved beyond Scotland’s narrow borders, the more I am convinced that we are a country intent on squandering a rich opportunity, regardless of our politicians’ public pronouncements.

But the journey has not been easy. primarily due to a lack of direction from Scottish Government and little commitment, resource or engagement at all levels of public service. A friend who reviewed this blog post suggested that I should replace the picture of a birthday cake (above) with one of a naked human back bearing bleeding scars from the our battles. He’s right – it is STILL a battle ten years on.

It is not as if the position in Scotland is getting better. We are moving at a glacial pace. The gap between Scotland and other countries in this regard is widening. I gave a talk earlier this year in which I showed assessments of Scotland, Romania and Kenya’s performance in Open Government (source: https://www.opengovpartnership.org/campaigns/global-report/ Vol 2) and asked the audience to identify which was Scotland.

Question: Which is Scotland? (Answer)

Economic opportunities

In February 2020 the European Data Portal published a report – The Economic Impact of Open Data – which sets out a clear economic case for open data. That paper looks at 15 previous studies between 1999 and 2020 which have examined at the market size of open data at national and international levels, measured in terms of GDP of each study’s geographical area.

Taking the average and median values from those reports (1.33% and 1.19% respectively) and an estimated GDP for Scotland (2018) of £170.4bn we can see that the missed opportunity for Scotland is of the order of £2.027bn to £2.266bn per annum. What is the actual value of the local market created by Scottish-created open data? if pushed for a figure I would estimate that it is currently worth a few hundred thousand pounds per annum, and no more. Quite a gap!

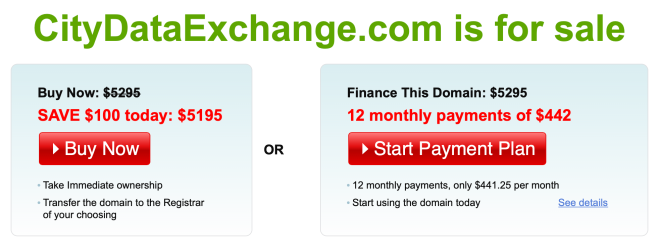

Meantime we have the usual suspect of consultants whispering sweetly in the ears of ministers, senior civil servants and council bosses that we should be monetising data, creating markets, selling it. There will be no mention, I suspect of the heavily-subsidised, private sector led, yet failed Copenhagen Data Exchange, I suspect. (Maybe they can make a few bob back selling the domain name! )

While this commercial approach to data may plug small gaps in annual funding for Scotland, and line the pockets of some big companies in the process, it won’t deliver the financial benefits at a national level of anything like the figures suggested by that EU Data Portal report but it will, in the process, actively hamper innovation and inhibit societal benefits.

I hear lots of institutions saying “we need to sell data” or “we need to sell access rights to these photos” or similar. Yet, in so many cases, the operation of the mechanisms of control; the staffing, administration, payment processing etc. far outstrips any generated income. When I challenged ex colleagues in local government about this behaviour their response was “but our managers want to see an income line” to which we could add “no matter how much it is costing us.” And this tweet from The Ferret on Tuesday of this week is another excellent example of this!

I have also heard lots of political proclamations of “open and transparent” government in Scotland since 2014. Yet most of the evidence points in exactly the opposite direction. Don’t forget, when Covid 19 struck, Scotland’s government was reportedly the only political administration apart from Bolsonaro’s far right one in Brazil to use the opportunity to limit Freedom of Information.

Openness, really?

It is clear that there is little or no commitment to open data in any meaningful way at a Scottish Government level, in local authorities, or among national agencies. This is not to say that there aren’t civil servants who are doing their best, often fighting against political or senior administration’s actions. Public declarations are rarely matched by delivery of anything of substance and conversations with people in those agencies (of which I have had many) paints a grim picture of political masters saying one thing and doing another, of senior management not backing up public statements of intent with the necessary resource commitment and, on more than occasion, suggestions of bad actors actually going against what is official policy.

I mention below that I joined the Open Government Partnership late in 2019. Initially I was enthusiastic about what we might achieve. While there are civil servants working dedicatedly on open government who want to make it work, I am unconvinced about political commitment to it. We really need to get some positive and practical demonstration that Scottish Government are behind us – otherwise I and the other civil society representatives are just assisting in an open-washing exercise.

In my view (and that of others) the press in Scotland does not provide adequate scrutiny and challenge of government. We have a remarkably ineffective political opposition. We also have a network of agencies and quangos which are reliant on the Scottish Government for funding who are unwilling to push back. All of this gives the political side a free pass to spout encouraging words of “open and transparent” yet do the minimum at all times.

We may have an existing Open Data Strategy for Scotland (2015) stating that Scotland’s data is “open by default”, yet my 2019 calculation was that over 95% of the data that could and should be open was still locked up. And there is little movement on fixing that.

We have many examples of agencies doing one thing and saying another, such as Scottish Enterprise extolling the virtues of Open Data yet producing none. Its one API has been broken for many months, I am told.

My good friends at The Data Lab do amazing work on funding MSc and Phd places, and providing funding for industrial research in the application of data science. Their mission is “to help Scotland maximise value from data …” yet they currently offer no guidance on open data, no targeted programme of support, no championing of open data at all, despite the widely-accepted economic advantages which it can deliver. There is the potential for The Data Lab to lead on how Scotland makes the most of open data and to guide government thinking on this!

All of this is not to pick on specific organisations, or hard working and dedicated employees within them. But it does highlight systemic failures in Scotland from the top of government downwards.

Fixing this is an enormous task: one which can only be done by the development of a fresh strategy for open data in Scotland, which is mandated for all public sector bodies, is funded as an investment (recognising the economic potential), and which is rigorously monitored and enforced.

I could go on…. but let’s look at this year’s survey.

(skip to summary)

Another year with what to show for it?

In February 2019 I conducted a survey of the state of open data in Scotland. It didn’t paint an encouraging picture. The data behind that survey has been preserved here. A year on, I started thinking about repeating the review.

In the intervening year I’d been involved in quite a bit activity around open data. I had

- joined the civic side of the Open Government group for Scotland and was asked to lead for the next iteration of the plan on Commitment Three (sharing information and data) ,

- joined the steering group of Stirling University’s research project, Data Commons Scotland,

- trained as a trainer for Wikimedia UK, delivering training in Wikidata, Wikipedia and Wiki Commons, and running multiple sessions for Code The City with a focus on Wikidata,

- created an open Slack Group for the open data community in Scotland to engage with one another,

- created an Open Data Scotland twitter account which has gained almost 500 followers, and

- initiated the first Scottish Open Data Unconference (SODU) 2020 which had been scheduled to take place as a physical event in March this year. That has now been reconfigured as an online unconference which will happen on 5th and 6th September 2020.

In restarting this year’s review of open data publishing in Scotland my aims were to see what had changed in the intervening 12 months and to increase the coverage of the survey: going broader and deeper and developing an even more accurate picture. That work spilled into March at which point Covid-19 struck. During lockdown I was distracted by various pieces of work. It wasn’t until August, and with a growing sense of the imminence of this 10-year anniversary, that I was galvanised to finish that review.

I am conscious that the methodology employed here is not the cleverest – one person counting only the numbers of datasets produced. This is something I return to later.

The picture in 2020

I broke the review down into sectoral groupings to make it more managable to conduct. By sticking to that I hope to make this overview more readable. The updated Git Hub repo in which I noted my findings is available publicly, and I encourage anyone who spots errors or omissions to make a pull request to correct them. Each heading below has a link to the Github page for the research.

Overall there is little significant positive change. This is one factor which gives rise to concerns about government’s commitment to openness generally and open data specifically; and to a growing cynicism in the civic community about where we go from here.

Local Government

(Source data here)

I reviewed this area in February 2020 and rechecked it in August. Sadly there has been no significant change in the publication of open data by local government in the eighteen months since I last reviewed this. More than a third of councils (13 out of a total of 32) still make no open data provision.

While the big gain is that Renrewshire Council have launched a new data portal with over fifty datasets, most councils have shown little or no change.

Sadly the Highland Council portal, procured as part of the Scottish Cities Alliance’s Data Cluster programme at £10,000’s cost, has vanished. I dont think it ever saw a dataset being added to it. Searching Highland Council’s website for open data finds nothing.

While big numbers of data sets don’t mean much by themselves, the City of Edinburgh Council has a mighty 236 datasets. Brilliant! BUT … none of them are remotely current. The last update to any of them was September 2019. Over 90% of them haven’t been updated since 2016 or earlier.

Similarly Glasgow, which has 95 datasets listed have a portal which is repeatedly offline for days at a time. A portal which won’t load is useless.

Dundee, Perth and Stirling continue to do well. Their offerings are growing and they demonstrate commitment to the long-haul.

Aberdeen launched a portal, more than three years in the planning, populated it with 16 datasets and immediately let their open data officer leave at the end of a short-term contract. Some of their datasets are interesting and useful – but there was no consultation with the local data community about what they would find useful, or deliver benefits locally; all despite multiple invitations from me to interact with that community at the local data meet-ups which I was running in the city.

It was hoped that the programme under the Scottish Cities alliance would yield uniform datasets, prioritised across all seven Scottish Cities, but there is no sign of that happening, sadly. So what you find on all portals or platforms is pretty much a pot-luck draw.

Where common standards exist – such as the 360 Giving standard for the publication of support for charities – organisations should be universally adopting these. Yet this is only used by two of 32 authorities, all of whom have grant-making services. Surely, during a pandemic especially, it would be advantageous to funders and recipients to know who is funding which body to deliver what project?

Councils – Open Government Licence and RPSI

This is a slight aside from the publication of open data, but an important one. If the Scottish Authorities were to adopt an OGL approach to the publication of data and information on their website (as both the Scottish Government’s core site and the Information Commissioner for Scotland do) then we would be able to at least reuse data obtained from those sites. This is not a replacement for publishing proper open data but it would be a tiny step forward.

The table below (source and review data here) shows the current permissions to reuse the content of Scottish Local Authorities’ websites. Many are lacking in clarity, have messy wording, are vague or misunderstand terminologies. They also, in the main, ignore legislation on fair re-use.

Open Government Licence

The Scottish Government’s own site is excellent and clear: permitting all content except logos to be be reused under the Open Government Licence. This is not true for local authorities. At present only Falkirk and Orkney Councils – two of the smaller ones – allow, and promote OGL re-use of content. There is no good reason why all of the public sector, including local government, should not be compelled to adopt the terms of OGL.

Re-use of Public Sector Information (RPSI) Regulations

Since 2015 the public sector has been obliged by the RPSI Regulations to permit reasonable reuse of information held by local authorities. So, even if Scottish LAs have not yet adopted OGL for all website content, they should have been making it clear for the last five years how a citizen can re-use their data and information from their website.

In my latest trawl through the T&Cs and Copyright Statements of 32 Scottish Local Authorities, I found only 7 referencing RPSI rights there, with 25 not doing so (see the full table above). I am fairly sure that these authorities are breaking the legal obligation on public bodies to provide that information.

Finally, given the presence of COSLA on the Open Government Scotland steering group, the situation with no open data; poor, missing or outdated data; and OGL and PRSI issues needs to be raised there and some reassurance sought that they will work with their member organisations to fix these issues.

Health

(Source data here)

The NHS Scotland Open Data platform continues to be developed as a very useful resource. The number of datasets there has more than doubled since last year (from 26 to 73).

None of the fourteen Health Boards publish their own open data beyond what is on the NHS Scotland portal.

Only one of the thirty Health and Social Care Partnerships (HSCPs) publish anything resembling open data: Angus HSCP.

COVID-19 and open data

While we are on health, I’ve wrote (here and here) early in the pandemic about the need for open data to help the better public understanding of the situation, and stimulate innovative responses to the crisis. The statistics team at Scottish Government responded well to this and we’ve started to develop a good relationship. I’ve not followed that up with a retrospective about what did happen. Perhaps I will in time.

It was clear that the need for open data in CV19 situation caught government and health sector napping. The response was slower than it should have been and patchy, and there are still gaps. People find it difficult to locate data when it is on muliple platforms, spread across Scots Govt, Health and NRS. That is, in a microcosm, one of the real challenges of OD in Scotland.

With an open Slack group for Open Data Scotland there is a direct channel that data providers could use to engage the open data community on their plans and proposals. They could also to sound out what data analysts and dataviz specialists would find useful. That opportunity was not taken during the Covid crisis, and while I was OK in the short term with being used as a human conduit to that group, it was neither efficient nor sustainable. My hope is that post SODU 2020, and as the next iteration of the Open Gov Scotland plan comes together we will see better, more frequent, direct engagement with the data community on the outside of Government, and a more porous border altogether.

Further and Higher Education

(Source data here)

There is no significant change across the sector in the past 18 months. The vast majority of institutions make no provision of open data. Some have vague plans, many of them historic – going back four years or more – and not acted on.

Lumping Universities and Colleges together, one might expect at a minimum properly structured and licensed open data from every institution on :

- courses

- modules

- events

- performance (perhaps some of this is on HESA and SFC sites?)

- physical assets

- environmental performance

- KPI targets and achievements etc.

Of course, there is none of that.

Universities and colleges

I reviewed open data provision of Universities and Colleges around 17 February 2020. I revisited this on 11 August 2020, making minor changes to the numbers of data sets found.

While five of fifteen universities are publishing increasing amounts of data in relation to research projects, most of which are on a CC-0 or other open basis, there continues to be a very limited amount of real operational open data across the sector with loads of promises and statements of intent, some going back several years.

The Higher Education Statistics Agency publishes a range of potentially useful-looking Open Data under a CC-BY-4.0 licence. This is data about insitutions, course, students etc – and not data published by the institutions themselves. But I could identify none of that. Overall, this was very disappointing.

Further, while there are 20 FE colleges. None produces anything that might be classed as open data. A few have anything beyond vague statement of intent. Perhaps City of Glasgow College not only comes closest, but does link to some sources of info and data.

The Crighton Observatory

While doing all of this, I was reminded of the Crighton Institute’s Regional Observatory which was announced to loud fanfares in 2013 and appears to have quietly been shut down in 2017. Two of the team involved say in their Linked In profiles that they left at the end of the project. Even the domain name to which articles point is now up for grabs (Feb 2020).

It now appears (Aug 2020) that the total initial budget for the project was >£1.1m. Given that the purpose of the observatory was to amass a great deal of open data, I have also attempted to find out where the data is that it collected and where the knowledge and learning arising from the project has been published for posterity? I can’t locate it. This FOI request may help. The big question: what benefits did the £1.1m+ deliver?

Scottish Parliament

(Source data here).

In February 2019 I found that The Scottish Parliament had released 121 data sets. This covers motions, petitions, Bills, petitions and other procedural data, and is very interesting. This year we find that they have still 121 data sets, so, there are no new data sources.

In fact that number is misleading. In February 2020 I discovered that while 75 of these have been updated with new data, the remaining 46 (marked BETA) no longer work. As of August 2020 this is still the case. Why not fix them, or at worst clear them out to simplfy the finadbility of working data?

Some of these BETA datasets should contain potentially more interesting / useful data e.g. Register of Members Interests but just don’t work. Returning: [“{message: ‘Data is presently unavailable’}”]

I didn’t note the availability of APIs last year, but there are 186 API calls available. Many of these are year-specific. I tested half a dozen and about a third of those returned error messages. I suspect some of these align with the non-functioning historic BETAs.

Sadly the issues raised a year ago about the lack of clarity of the licensing of the data is unchanged. To find the licence, you have to go to Notes > Policy on Use of SPCB Copyright Material. Following the first link there (to a PDF) you see that you have to add “Contains information licenced under the Scottish Parliament Copyright Licence.” to anything you make with it, which is OK. But if you go to the second link “Scottish Parliament Copyright Licence” (another PDF) the wording (slightly) contradicts that obligation. It then has a chunk about OGL but says, “This Scottish Parliament Licence is aligned with OGLv3.0” whatever that means. Why not just license all of the data under OGL? I can’t see what they are trying to do.

Scottish Government

(Source data here)

Trying to work out the business units within the structure of Scottish Government is a significant challenge in itself. Attempting to then establish which have published open data, and what those data sets are, and how they are licensed, is almost an impossible task. If my checking, and arithmetic are right, then of 147 discrete business units, only 27 have published any open data and 120 have published none.

So we can say with some confidence that the issue with findability of data raised in Feb 2019 is unchanged, there being no central portal for open data in the Scottish public sector or even for Scottish Government. Searching the main Scottish Government website for open data yields 633 results, none of which are links to data on the first four screenfuls. I didn’t go deeper than that.

The Scottish Government’s Statistics Team have a very good portal with 295 Data Sets from multiple organisational-providers. This is up by 46 datasets on last year and includes a two new organisations: The Care Inspectorate and Registers of Scotland. The latter, so far (Aug 2020), has no datasets on the portal.

There are some interesting new entrants into the list of those parts of Scottish Government publishing data such as David MacBrayne Limited which is, I believe, wholly owned by SG and is the parent, or operator of Calmac Ferries Limited. On 1st March 2020 they released a new data platform to get data about their 29 ferry routes. This is very welcome. After choosing the dates, routes and traffic types you can download a CSV of results. While their intent appears to be to make it Open Data, the website is copyright and there is no specific licensing of the data. This is easily fixable.

It is also interesting to contrast Transport Scotland with work going on in England. Transport Scotland’s publication scheme says of open data “Open data made available by the authority as described by the Scottish Government’s Open Data Strategy and Resource Pack, available under an open licence. We comply with the guidance above when publishing data and other information to our website. Details of publications and statistics can be found in the body of this document or on the Publications section of our website.” I searched both without success for any OD. Why not say “we don’t publish any Open Data”? Compare this complete absence of open data with even the single project Open Bus Data for England. Read the story here. Scotland is yet again so far behind!

Summary

In the review of data I’ve shown that little has changed in 18 months. Very few branches of government are publishing open data at all. The landscape is littered with outdated and forgotten statements of good intent which are not acted on; broken links; portals that vanish or don’t work; out of date data; yawning gaps in publication and so on.

The claim of “Open By Default” in the current (2015) Open Data Strategy is misleading and mostly ignored with consequence. The First Minister may frequently repeat the mantra of “Open and Transparent” when speaking or questioned by journalists, but it is easily demonstrable that the administration frequently act in the directly opposite way to that.

The recent situations with Covid-19 and the SQA exams results show Scotland would have found itself in a much better place this year with a mature and well-developed approach to open data: an approach one might have reasonably expected after five full years of “open by default”.

The social and economic arguments for open data are indisputable. These have been accepted by most other governments of the developed world. Importantly, they have also been taken up and acted on by developing nations who have in many cases overtaken Scotland in their delivery of their Open Government plans.

The work I have done in 2019 and in this review is not a sustainable one – i.e. one single volunteer monitoring the activity of every branch and level of government in Scotland. And the methodology is limited to what is achievable by an individual.

A country which was serious about Open Data would have targets and measures, monitoring and open reporting of progress.

- It wouldn’t just count datasets published. It would be looking at engagement, the usefulness of data and its integration into education.

- It would fund innovation: specifically in the use of open data; in the creation of tools; in developing services to both support government in creating data pipelines, and in helping citizens in data use.

- It would co-develop and mandate the use of data standards across the public sector.

- It would develop and share canonical lists of ‘things’ with unique identifiers allowing data sets to be integrated.

- It would adopt the concept of data as infrastructure on which new products, services, apps, and insights could be built.

I really want Scotland to make the most of the opportunities afforded by Open Data. I wouldn’t have spent ten years at this if I didn’t believe in the potential this offers; nor if I didn’t have the evidence to show that this can be done. I wouldn’t be giving up my time year-on-year researching this, giving talks, organising groups and creating opportunities for engagement.

What is fundamentally lacking here is some honesty from Scottish Government ministers instead of their pretence of support for open data.

Ian Watt

20 August 2020

Link to an index of pieces I have written on Open Data:

http://watty62.co.uk/2019/02/open-data-index-of-pieces-that-i-have-written/

Answer to quiz

Scotland is B, in the centre. Kenya is A, and Romania C.

I could have chosen Mexico, Honduras, Paraguay, Uruguay – or others. All are doing better than Scotland.

Header Image by David Ballew on Unsplash.